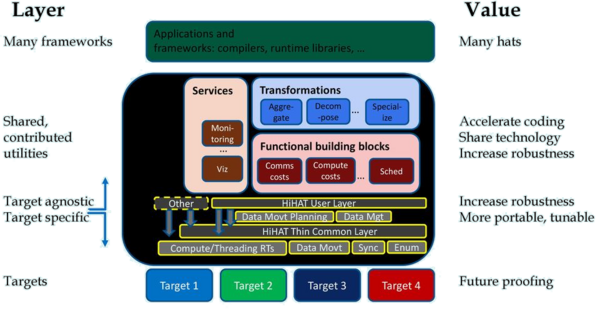

HiHAT SW Stack

This page is dedicated to describing possible components of the HiHAT SW Stack.


Approach

First, let's outline the general approach of HiHAT:

- Create low-level abstractions that expose the full capabilities of HW platforms

- A bare-bones, minimal "common layer"

- This is as thin, light and low-overhead as it can possibly be, e.g. it makes almost no decisions and does almost no look-ups.

- This layer may not be very usable by humans directly; it is kept minimal because it is critical to performance.

- There will be upward-looking interfaces exposed to higher layers (runtimes, etc.), and downward-looking interfaces to target-specific glue code.

- Functions of the common layer include:

- Multiplexing <target resource, action> tuples to underlying target-specific glue code

- A richer, more usable "user layer", layered on top of the common layer, that may incur more overhead. The user layer may include convenience functionality that would be useful to many runtimes and clients above it.


- Push functionality that is common across HW platforms above the common and user layers. Focus on offering building blocks, services and transforms that would be used by various runtimes that are built on top of them, rather than on trying to get everyone to unify on some single such runtime, which most agree is futile.

- The primary focus for this effort is on supporting tasking runtimes, but the platform-retargetable layers may be relevant to runtimes that have nothing to do with tasking. We are encouraged to tag contributions to this effort to indicate whether they are applicable beyond tasking.

- The scope of the HiHAT effort spans on-node and cross-node management. We are encouraged to tag issues as pertaining to one of {on-node only, cross-node only, or both} where appropriate.
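To make the split between the common and user layers a little more concrete, here is a minimal Python sketch. It is an illustration under assumptions, not a proposed API: the names CommonLayer, register, invoke, UserLayer, and the "cpu" glue code are all hypothetical. The common layer only multiplexes a (target resource, action) tuple to whatever glue code a target registered, making no decisions; the user layer adds a convenience (a default target) at the cost of a little extra work per call.

```python
from typing import Callable, Dict, Optional, Tuple

# Hypothetical glue-code signature: any callable supplied by a target.
GlueFn = Callable[..., object]


class CommonLayer:
    """Thin dispatcher from (target resource, action) tuples to glue code.

    It makes no policy decisions; each call does one dictionary look-up.
    """

    def __init__(self) -> None:
        self._table: Dict[Tuple[str, str], GlueFn] = {}

    def register(self, target: str, action: str, fn: GlueFn) -> None:
        # Downward-looking interface: target-specific glue code plugs in here.
        self._table[(target, action)] = fn

    def invoke(self, target: str, action: str, *args, **kwargs) -> object:
        # Upward-looking interface: runtimes call actions on a named target.
        # An unregistered tuple raises KeyError; no fallback is attempted.
        return self._table[(target, action)](*args, **kwargs)


class UserLayer:
    """Convenience layer built on the common layer; may add overhead."""

    def __init__(self, common: CommonLayer, default_target: str) -> None:
        self.common = common
        self.default_target = default_target

    def alloc(self, nbytes: int, target: Optional[str] = None) -> object:
        # Convenience: fall back to a default target when none is given.
        return self.common.invoke(target or self.default_target, "alloc", nbytes)


# Hypothetical "cpu" glue code backed by plain Python byte buffers.
def cpu_alloc(nbytes: int) -> bytearray:
    return bytearray(nbytes)


def cpu_copy(dst: bytearray, src: bytes) -> None:
    dst[: len(src)] = src


common = CommonLayer()
common.register("cpu", "alloc", cpu_alloc)
common.register("cpu", "copy", cpu_copy)

user = UserLayer(common, default_target="cpu")
buf = user.alloc(4)                       # routed to cpu_alloc via the common layer
common.invoke("cpu", "copy", buf, b"ab")  # buf is now bytearray(b"ab\x00\x00")
```

The single dictionary look-up stands in for whatever cheaper indexing (e.g. integer handles into a table) a real common layer might use to keep per-call overhead near zero.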

Functionality

Next, let's consider which functionality may be platform specific, and so should be abstracted by the user and common layers, and how that functionality would be nested. It can be grouped under the heading of actions, which come in 4 kinds:

- Compute: map work to underlying computing resources, e.g. threads

- Ex: OpenMP, TBB, QThreads, Argobots

- Data movement: move data from a source to a sink, where these may be memory of different kinds, different layers, different NUMA domains, or different address domains. Data movement planning occurs above the common layer, since choosing how best to move data, given the pair of targets and the size and layout of the transfer, is a higher-level decision. The lower layer may provide a minimal set of underlying data movement styles, without making any choice about which is most suitable.

- Local

- Direct memory writes and memcpy-like APIs

- DMAs

- Remote

- High-level interfaces like MPI, UPC, *SHMEM

- Mid- and lower-level interfaces like UCX, libfabric

- IO to storage

- Local

- Data management: operations on memory include allocate, free, pin, materialize, and annotate with various metadata properties

- May include allocation from one or more pools, e.g. different pools for different memory kinds

- May include use of standard libraries like libnuma, or proprietary drivers

- Synchronization: provide completion handles for actions, and new actions that combine other completion conditions and/or induce other kinds of dependences
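The separation between data movement styles (lower layer) and data movement planning (above the common layer), plus the idea of completion handles and combining actions, can be sketched in Python as below. This is only an illustration under stated assumptions: concurrent.futures.Future objects stand in for completion handles, a ThreadPoolExecutor simulates an asynchronous DMA engine, and the names style_memcpy, style_dma, plan_copy, when_all and the 64 KiB DMA_THRESHOLD are hypothetical. The lower layer offers two styles and never chooses between them; plan_copy makes the size-based choice; when_all is a new action whose completion combines the completion of others.

```python
import threading
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable, Dict, List

# ---- Lower layer: movement *styles* only, no policy (hypothetical names) ----
_engine = ThreadPoolExecutor(max_workers=2)  # simulates an async DMA engine


def _copy(dst: bytearray, offset: int, src: bytes) -> int:
    dst[offset : offset + len(src)] = src
    return len(src)


def style_memcpy(dst: bytearray, offset: int, src: bytes) -> Future:
    """Synchronous copy; returns an already-completed handle."""
    handle: Future = Future()
    handle.set_result(_copy(dst, offset, src))
    return handle


def style_dma(dst: bytearray, offset: int, src: bytes) -> Future:
    """Asynchronous copy handed to the simulated engine."""
    return _engine.submit(_copy, dst, offset, src)


STYLES: Dict[str, Callable[[bytearray, int, bytes], Future]] = {
    "memcpy": style_memcpy,
    "dma": style_dma,
}

# ---- Above the common layer: planning chooses among the styles ----
DMA_THRESHOLD = 64 * 1024  # hypothetical cut-over size in bytes


def plan_copy(dst: bytearray, offset: int, src: bytes) -> Future:
    style = "dma" if len(src) >= DMA_THRESHOLD else "memcpy"
    return STYLES[style](dst, offset, src)


# ---- Synchronization: a new action that completes when all inputs do ----
def when_all(handles: List[Future]) -> Future:
    combined: Future = Future()
    if not handles:
        combined.set_result([])
        return combined
    remaining = [len(handles)]
    lock = threading.Lock()

    def on_done(_: Future) -> None:
        with lock:
            remaining[0] -= 1
            if remaining[0] > 0:
                return
        # Every input is complete here; propagate the first failure, if any.
        failed = [h for h in handles if h.exception() is not None]
        if failed:
            combined.set_exception(failed[0].exception())
        else:
            combined.set_result([h.result() for h in handles])

    for h in handles:
        h.add_done_callback(on_done)
    return combined


dst = bytearray(4 + DMA_THRESHOLD)
small = b"head"                                     # below threshold: memcpy style
large = bytes(range(256)) * (DMA_THRESHOLD // 256)  # at threshold: dma style
done = when_all([plan_copy(dst, 0, small), plan_copy(dst, len(small), large)])
sizes = done.result(timeout=5)  # [4, 65536] once both copies land
```

Swapping the size threshold for a richer policy only touches plan_copy; the styles and the handle machinery stay unchanged, which is the point of keeping planning out of the lower layer.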

Tan boxes describe the functionality offered by the brown boxes just above them. The tan boxes also indicate functionality that may be target specific.

Enumeration: discovery of target platform features is functionality that also needs to be provided, but it is a query rather than an action.
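Unlike the actions above, enumeration only reports what exists and changes nothing. A minimal Python sketch of such a query follows, assuming a hypothetical TargetInfo record and a made-up, hard-coded inventory; a real implementation would populate the inventory from each target's glue code (e.g. via libnuma or vendor drivers).

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class TargetInfo:
    name: str          # hypothetical naming scheme, e.g. "gpu1"
    kind: str          # "cpu", "gpu", "fpga", ...
    memory_bytes: int
    numa_domain: int
    features: FrozenSet[str] = frozenset()


# Made-up inventory for illustration only; real discovery would come from
# each target's glue code.
_INVENTORY: List[TargetInfo] = [
    TargetInfo("cpu0", "cpu", 64 * 2**30, 0, frozenset({"fp64", "atomics"})),
    TargetInfo("gpu0", "gpu", 16 * 2**30, 0, frozenset({"fp64"})),
    TargetInfo("gpu1", "gpu", 16 * 2**30, 1, frozenset({"fp64", "atomics"})),
]


def enumerate_targets(
    kind: Optional[str] = None, required: FrozenSet[str] = frozenset()
) -> List[TargetInfo]:
    """Pure query: filters the inventory and never changes any state."""
    return [
        t for t in _INVENTORY
        if (kind is None or t.kind == kind) and required <= t.features
    ]


gpus_with_atomics = [t.name for t in enumerate_targets("gpu", frozenset({"atomics"}))]
# gpus_with_atomics == ["gpu1"]
```

Because the query has no side effects, runtimes above the common layer can call it as often as they like when making placement decisions.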

We'll need to agree on a layering architecture, and interfaces for each of these.

There may be additional platform-specific functionality of interest:

- Fast queues (Carter Edwards)

There may also be related components that are not part of this hierarchy, e.g. because one or more components in this stack interface with them:

- Resource management (Stephen Olivier)

We will also want to begin to flesh out what each target would need under the thin common layer, and what the key aspects and components of the glue code between that common layer and the target would be.

Illustration

The diagram below suggests one possible arrangement.

Some notes on boxes in this diagram, working bottom up:

- Target: Refers to a particular target architecture, e.g. CPU, GPU, FPGA, ... from a given vendor.

- Use of these targets is not mutually exclusive: in a heterogeneous system, targets of different kinds could be used simultaneously.

- The brown and tan boxes are defined above.

- Functional building blocks

- Cost model for moving data, locally or remotely (comms). This could be a function of size, data layout, data paths, topology, etc.

- Cost model for compute, local or remote. This could be a function of data set size, data layout, computational complexity, and some fudge factor.

- Services

- Monitoring: What happens when, error reporting, frequency of events

- Viz: User interface tools to visualize monitoring data

- Transformations: convert one set of pending actions to another

- Aggregate: Many to fewer, e.g. congeal a set of same-sized tasks into a meta-task, congeal a set of contiguous data transfers into a single larger transfer

- Decompose: Fewer to more, e.g. replacing a monolithic Cholesky with a tiled Cholesky, data partitioning

- Specialize: some to some, e.g. replace a task that expects one data layout for an operand with a different version that runs faster with a different data layout, plus an additional action that converts a data collection from one layout to another

- Applications and frameworks

- Other data-parallel runtimes, e.g. Kokkos, RAJA, Legion, PaRSEC

- Language runtimes, e.g. for C++

- These runtimes could in turn be layered, e.g. with DARMA on top
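The data-movement cost model and the Aggregate transformation listed above interact: aggregation pays off precisely when the cost model charges a fixed per-transfer overhead. Below is a Python sketch assuming the classic latency-plus-bandwidth (alpha-beta) model, which depends only on transfer size; the layout, data path and topology terms mentioned above are left out. Transfer, LinkModel, aggregate, and the link parameters are hypothetical, and this transform merges only transfers that are contiguous in both source and destination.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Transfer:
    src: int     # source offset in bytes
    dst: int     # destination offset in bytes
    nbytes: int


@dataclass(frozen=True)
class LinkModel:
    latency_s: float      # alpha: fixed cost paid once per transfer
    bandwidth_Bps: float  # beta: sustained bytes per second

    def cost(self, t: Transfer) -> float:
        return self.latency_s + t.nbytes / self.bandwidth_Bps


def total_cost(model: LinkModel, transfers: List[Transfer]) -> float:
    return sum(model.cost(t) for t in transfers)


def aggregate(transfers: List[Transfer]) -> List[Transfer]:
    """Many-to-fewer transform: merge runs contiguous in both src and dst."""
    merged: List[Transfer] = []
    for t in sorted(transfers, key=lambda t: t.src):
        if merged:
            last = merged[-1]
            if t.src == last.src + last.nbytes and t.dst == last.dst + last.nbytes:
                merged[-1] = Transfer(last.src, last.dst, last.nbytes + t.nbytes)
                continue
        merged.append(t)
    return merged


link = LinkModel(latency_s=2e-6, bandwidth_Bps=10e9)  # made-up link parameters
pending = [
    Transfer(0, 4096, 1024),
    Transfer(1024, 5120, 1024),
    Transfer(2048, 6144, 1024),
    Transfer(8192, 0, 512),
]
fewer = aggregate(pending)
# fewer == [Transfer(0, 4096, 3072), Transfer(8192, 0, 512)]
```

Under this model, merging k contiguous transfers removes (k - 1) latency terms while the bandwidth term is unchanged, so here the modeled saving is 2 * 2e-6 s. A cost model that also accounted for layout or topology could make the same transform a loss, which is why such decisions belong in services above the common layer.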